I’m not sure I even wrote a post on my personal blog when we raised our A round, but here we are, about four and a half years after we founded the company, having just announced that we closed our $16M B round!

The official Luma Health funding announcement blog post has a lot more interesting information about the round itself. You can also check out a few of the articles that have already crossed the wire, such as this one by VentureBeat or this one by HIT Consultant. I wanted this post on my personal blog to be more of a behind-the-scenes look at the three parts that came together to make today happen.

On a day like today, a company is looking to do a lot of things at once to make noise and drive the hype, and we were no exception. Earlier in the year, our Marketing team had kicked off a total brand overhaul, so when we were in the process of closing our Series B funding, we decided to line up the B announcement with the brand refresh (full details in the Luma brand design blog post) and launch them at the same time, so we'd have that ideal 1+1=3 punch.

Doing a brand refresh is no small feat, as it has a habit of spidering across all parts of the organization. You have to update sales collateral, landing pages, slide decks, event booths, social pages, etc., etc.; the list goes on and on. But perhaps most importantly, a brand refresh also means you’re going to be updating your entire product to match the new brand.

At Luma, we have a centralized Design team run by our Design Director. They’re responsible for all aspects of design, be it product UX/UI, brand marketing, event collateral, etc. Right or wrong, many companies have design functions embedded within each functional team (e.g. product designers in the VP Product org, marketing designers in the VP Marketing org), but we made a choice early on to centralize design in one team that’s shared across the company.

So, we had three trains moving at the same time: press releases and media for the B announcement, a new marketing website for the brand overhaul, and a product refresh to match. The Design team was responsible for carrying the Lichtenstein-esque look and feel through all the touchpoints, and the Marketing team was responsible for coordinating and driving the overall projects.

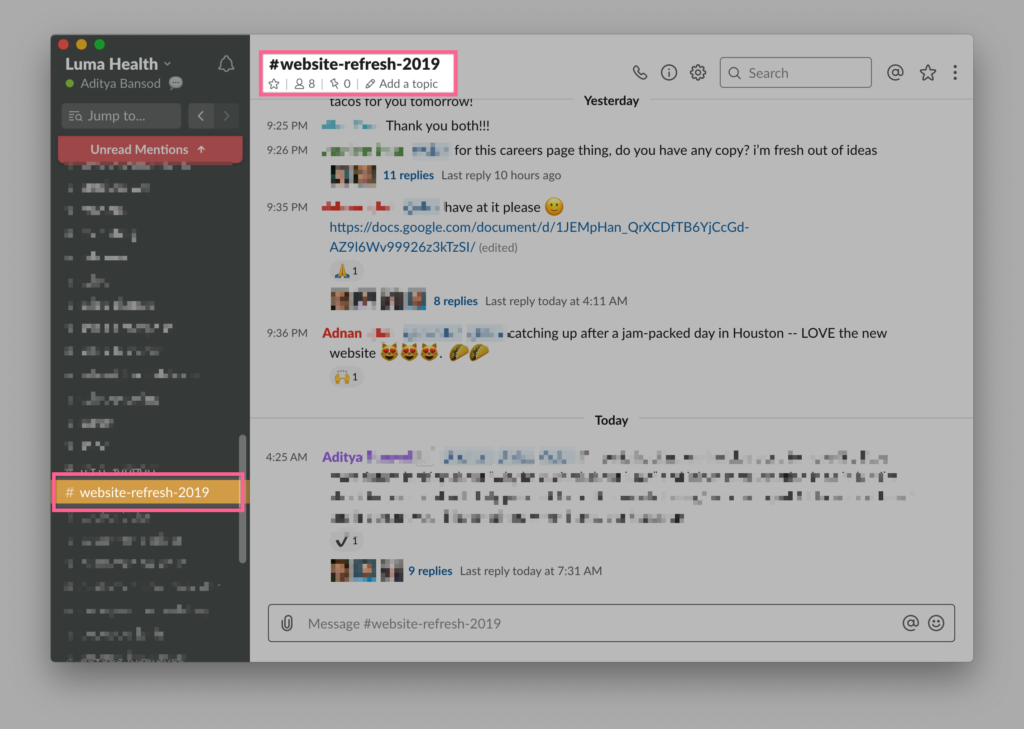

The main “hard part” of doing something like this is making sure all the trains arrive when you want them to, and part of that is deciding that they don’t all need to get to the station at the same time. To coordinate all the pieces, we (of course) used Slack. We’re a zero-email company, so the entire project ran through a Slack channel called #website-refresh-2019.

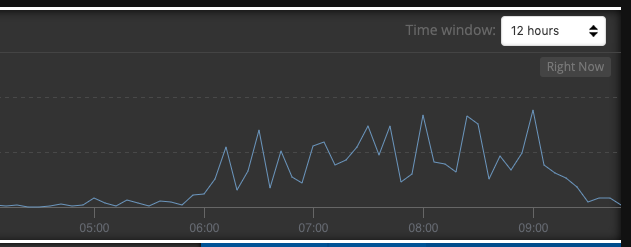

We launched the product updates on Monday 8/26 at 4:30PM PT, launched the marketing website on Monday 8/26 at 5:00PM PT, and then launched the press releases and cleared the news embargo on Tuesday 8/27 at 5:00AM PT. And just like that: a new website, a new brand look, and a new product look and feel, all in support of the future growth of Luma Health, fueled by our $16M raise.